INSIDE AI #13: Meta Restructures, NVIDIA Huang's Rescue Plan, Take Action: AI Whistleblower Protection Act by NWC

Edition 13

In This Edition:

Key takeaways:

News:

Meta Restructures AI Ops Amid Employee Burnout, Talent Loss - Automates Safety Review to Speed Up Time to Market

Nvidia’s Internal Struggle: Covert Chip Plans to Save Its China Market and Huang’s Trump Outreach

Microsoft, Regulators, and Nonprofits Challenge OpenAI’s Corporate Realignment

Musk Attempted to Block the UAE Data Center Deal if it Didn't Include xAI

Support the Passage of the AI Whistleblower Protection Act

Wondering What to “Look Out For” as A Lab Insider? Updates from AI Lab Watch

Insider Currents

Carefully curated summaries and links to the latest news, spotlighting the voices and concerns emerging from within AI labs.

Meta Restructures AI Ops + Safety Review Changes

In recent weeks, tensions have risen within Meta Platforms’ generative AI group, culminating in a sweeping internal restructuring. According to documents reviewed by The Information, the group recently recorded some of the lowest employee satisfaction scores across the company. In feedback to leadership, staff cited deep burnout, internal conflicts, and a lack of focus.

In response, Meta leadership acknowledged the exhaustion and gave the team a day off following the launch of the company’s new stand-alone app for its Meta AI assistant, according to three people familiar with the matter. Axios reported that the broader restructuring aims to speed up the rollout of new products and features.

An Exodus of Expertise

At the same time, Meta is witnessing a significant brain drain. As Business Insider reported, several top researchers have exited—many joining Mistral, a high-profile French startup co-founded by former Meta scientists Guillaume Lample and Timothée Lacroix.

The talent loss is stark: of the 14 researchers credited on Meta’s influential 2023 LLaMA paper, only three remain at the company. The upheaval coincides with delays to Meta’s large-scale “Behemoth” AI model and the quiet exit of Joelle Pineau, a key AI research director, after eight years at the company.

Automating Safety Reviews

Perhaps consequently, internal documents obtained by NPR show Meta plans to automate up to 90% of privacy and integrity reviews, the assessments that evaluate whether features could harm users, violate privacy, or spread misinformation. Previously, human reviewers examined all major platform changes.

Under the new system, product developers conduct their own risk assessments, with human review reserved for exceptional cases. “Most product managers and engineers are not privacy experts,” said Zvika Krieger, Meta's former director of responsible innovation.

A former Meta executive, speaking anonymously out of fear of retaliation from the company, also warned:

“This process functionally means more stuff launching faster, with less rigorous scrutiny, creating higher risks.”

→ Read: Who’s In Charge of AI at Meta After Shake-Up (paywalled)

→ Read: Meta Plans to Replace Humans with AI to Assess Privacy and Societal Risks

Nvidia’s Internal Struggle: Covert Chip Plans to Save Its China Market and Huang’s Trump Outreach

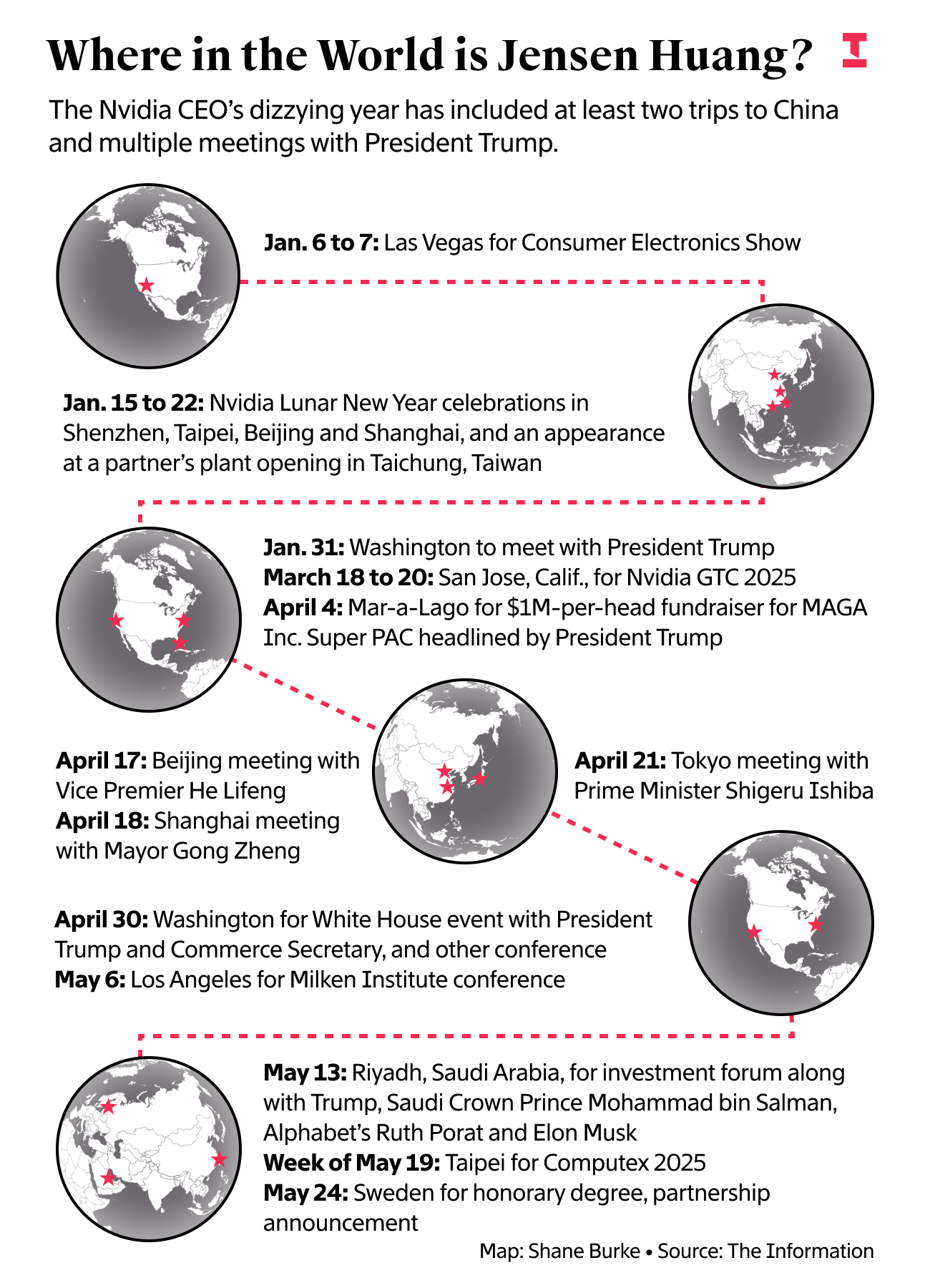

Details from inside Nvidia reveal that CEO Jensen Huang has abandoned his traditional hands-off political approach, making frequent undisclosed trips to Mar-a-Lago in a high-stakes bid to salvage the company’s $8 billion China business.

Internal executives report Huang’s prolonged absences from Nvidia’s Santa Clara headquarters: “We see a lot less of Jensen—he’s travelling to Florida a lot.” These Mar-a-Lago meetings with Trump represent a significant shift for a CEO who once even declined White House invitations under Biden, according to a person with direct knowledge of the matter.

Huang needs to find a way to revive Nvidia’s business in China. To do that, he has to carefully balance the interests of the Trump administration, the Chinese government, and Chinese customers, a challenge he has been working on since Trump became president, The Information reported, based on interviews with over 20 people, including Nvidia staff, customers, and U.S. and Chinese officials.

China rescue plan:

Emergency internal meetings following the H20 chip ban have produced Nvidia’s covert plan: a new China-specific chip, tentatively called “B30”, designed to circumvent export controls. The company is also developing a new computer system centred around its B30 chips, designed to let Chinese customers maintain strong performance by connecting the chips so that they operate together efficiently. Four sources confirm that major Chinese clients, including ByteDance, Alibaba, and Tencent, have expressed interest, with Nvidia promising to produce over 1 million units of B30 this year.

→ Read: Jensen Huang Used to Delegate Politics—Until Trump’s Return (paywalled)

Microsoft, Regulators, and Nonprofits Challenge OpenAI’s Corporate Realignment

OpenAI’s plan to restructure its for-profit subsidiary into a $300 billion public-benefit corporation raises concerns over whether the nonprofit will receive fair compensation and maintain meaningful control. According to people familiar with the matter, the Delaware attorney general is moving to hire an investment bank to independently assess the value of the nonprofit parent’s equity in the new entity, The Wall Street Journal reported. Microsoft, which effectively has the power to block the deal, is currently at odds with OpenAI over its equity share in the new structure; both companies have hired investment banks to advise them.

According to The Information, critics, including a coalition of over 60 nonprofits and the group Not for Private Gain, are warning that the restructuring could shortchange the charity and are urging regulators to intervene. Meanwhile, OpenAI has hired well-connected Democratic operatives to navigate California’s political landscape, signalling a focus on managing opposition rather than addressing core ethical and governance issues.

According to the articles, OpenAI is under intense financial and geopolitical pressure, with $20 billion in SoftBank funding at stake if the restructuring isn’t finalized by year’s end. The company is also preparing to launch an “open” model, seen as a response to China’s growing lead in the space. The outcome of this governance battle will shape whether OpenAI’s developments prioritize public benefit or private gain.

→ Read: OpenAI’s New Path to Conversion Faces Activist Opposition (paywalled)

→ Read: Delaware AG Hiring Investment Bank to Advise on OpenAI Conversion (paywalled)

→ Read: OpenAI’s Democrats in Action; China’s Open-Source AI (paywalled)

Musk Attempted to Block the UAE Data Center Deal if it Didn’t Include xAI

Prior to Trump’s mid-May diplomatic tour of three Gulf nations, Musk discovered that Sam Altman would be participating in the trip and that negotiations for a UAE-based project were underway. According to anonymous White House officials, Musk expressed concerns about the arrangement and requested to join the delegation.

Then, on a call with officials at G42, the AI firm controlled by the brother of the UAE’s president, Musk had a decree for those assembled: their plan had no chance of approval unless xAI was included in the deal. Despite Musk’s complaints, Trump and U.S. officials signed off on the deal terms and decided to move forward with the project. White House aides then discussed how to calm Musk down because Trump wanted to announce the deal before the end of the trip.

The plan in question is a site that could eventually hold a five-gigawatt cluster of AI data centres. A cluster of this size would be far larger than any single site in the U.S. and would host the servers of various U.S. AI companies. Musk’s xAI has been seen as a likely candidate for future sites at the giant data centre cluster.

→ Read: Elon Musk Tried to Block Sam Altman’s Big AI Deal in the Middle East (paywalled)

Announcements & Call to Action

Updates on publications, community initiatives, and “call for topics” that seek contributions from experts addressing concerns inside Frontier AI.

US Citizens, Contact Congress: Pass AI Whistleblower Protection

“AI Whistleblowers are facing severe retaliation for speaking out against safety and security failures which are threatening the privacy of Americans and compromising national security”, wrote NWC.

The recently introduced AI Whistleblower Protection Act includes anti-retaliation provisions for AI whistleblowers and establishes clear reporting guidelines for the Department of Labor regarding AI security vulnerabilities. This legislation represents a critical response to the urgent need for AI employee protection. Its passage would mark a landmark turning point in increasing regulation, transparency, and oversight within the AI industry.

Take action today by contacting your Representatives and Senators to call for the passage of this critical bill!

→ Read About the Campaign and Contact Your Representatives or Senators

Need a refresher on what this is about?

A bipartisan coalition led by Senator Chuck Grassley has introduced legislation that could make tech workers the primary guardians of AI safety. The AI Whistleblower Protection Act (AIWPA), introduced in May, would protect employees who report AI security vulnerabilities or violations.

Who is protected?

The law offers protection to current and former employees, as well as contractors working at AI companies. To be protected, individuals don’t need to prove that a law was definitively broken—they just need to raise their concerns in good faith.

What protections do workers have?

The law protects workers from being fired, demoted, threatened, or harassed for reporting issues. If an employer retaliates, workers have several options:

File a complaint with the U.S. Department of Labor

Take legal action in federal court

Be reinstated to their job

Recover double their lost wages

Receive compensation for damages

Employers cannot require workers to waive these rights through contracts or forced arbitration.

What’s missing?

Sophie Luskin’s article in Tech Policy Press points out that the law could be strengthened by also protecting employees who report when their company fails to follow its own internal safety policies, not just violations of federal regulations.

This legislation follows a broader trend—Congress has enacted similar whistleblower protections in rapidly evolving industries such as nuclear energy, aviation, and finance.

Meanwhile, whistleblower advocate Poppy Alexander warns that placing regulatory burden solely on individual employees is problematic:

“Being a whistleblower is hard enough. If we move toward a protection-as-regulation model, whistleblowers will be put under even more pressure as they become the only obstacle to AI’s boundless growth.”

→ Read: What the AI Whistleblower Protection Act Would Mean for Tech Workers

→ Read: AI Whistleblowers Can’t Carry the Burden of Regulating Industry (paywalled)

“What Concerning Behaviour Should I be Looking Out for?” - A Common Question We Hear From Frontier AI Insiders

Beyond more complex answers, a straightforward first answer to that question is:

“Whenever your company is violating its own commitments or promises”.

Or, slightly more complicated:

“Whenever your lab is significantly lacking vs. industry best practices”.

AI Lab Watch has updated its resources to help answer this question:

→ Find an overview of current lab commitments to identify if and where your lab may not be holding up to them.

Thank you for trusting OAISIS as your source for insights on protecting and empowering insiders who raise concerns within AI labs.

Your feedback is crucial to our mission. We invite you to share any thoughts, questions, or suggestions for future topics so that we can collaboratively enhance our understanding of the challenges and risks faced by those within AI labs. Together, we can continue to amplify and safeguard the voices of those working within AI labs who courageously address the challenges and risks they encounter.

If you found this newsletter valuable, please consider sharing it with colleagues or peers who are equally invested in shaping a safe and ethical future for AI.

Until next time,

The OAISIS Team